- July 26, 2024


Chatter over artificial intelligence and ChatGPT is, in some quarters, hitting a fever pitch. Uses are seemingly infinite. So too are the worries and pitfalls, which range from legal and ethical questions to simply understanding the applications.

The Business Observer tackled the challenges — and opportunities — from multiple angles.

Artificial intelligence, or AI, is a “wide-ranging branch of computer science (that) can build smart machines capable of performing tasks that typically require human intelligence,” according to Built In, an online community for startups and tech companies. “AI allows machines to model, or even improve upon, the capabilities of the human mind. And from the development of self-driving cars to the proliferation of generative AI tools like ChatGPT and Google’s Bard, AI is increasingly becoming part of everyday life. AI can be divided into four categories, based on the type and complexity of the tasks a system is able to perform. They are reactive machines; limited memory; theory of mind; and self awareness.”

ChatGPT is an artificial intelligence chatbot developed by OpenAI and released in November 2022, according to Google and Wikipedia.

The name ChatGPT combines Chat, referring to its chatbot functionality, and GPT, which stands for Generative Pre-trained Transformer, a type of large language model. Microsoft is a partner of OpenAI, having invested in the company since 2019.

ChatGPT, according to its home page on OpenAI, “interacts in a conversational way. The dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises and reject inappropriate requests.”

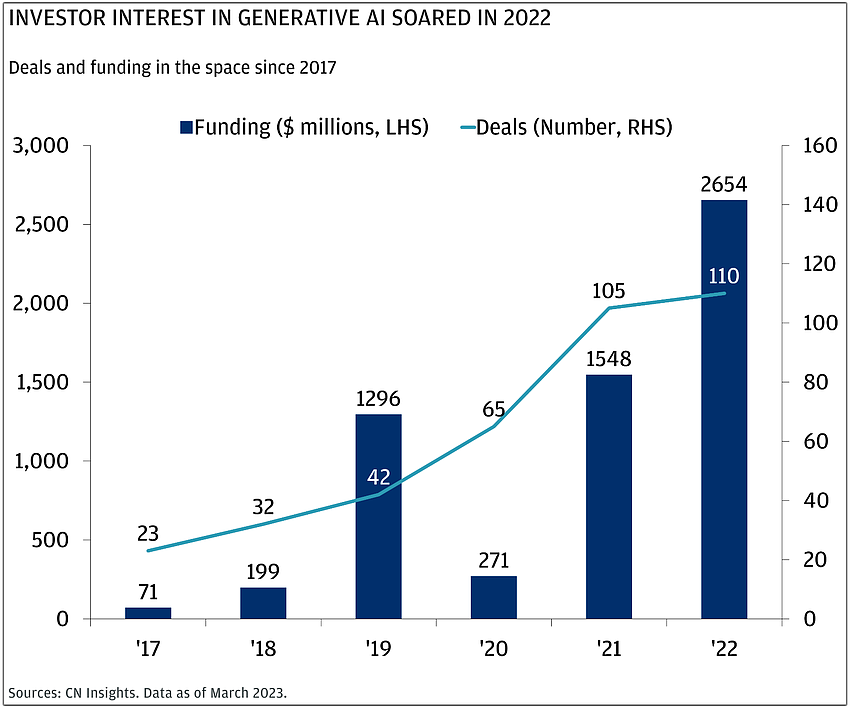

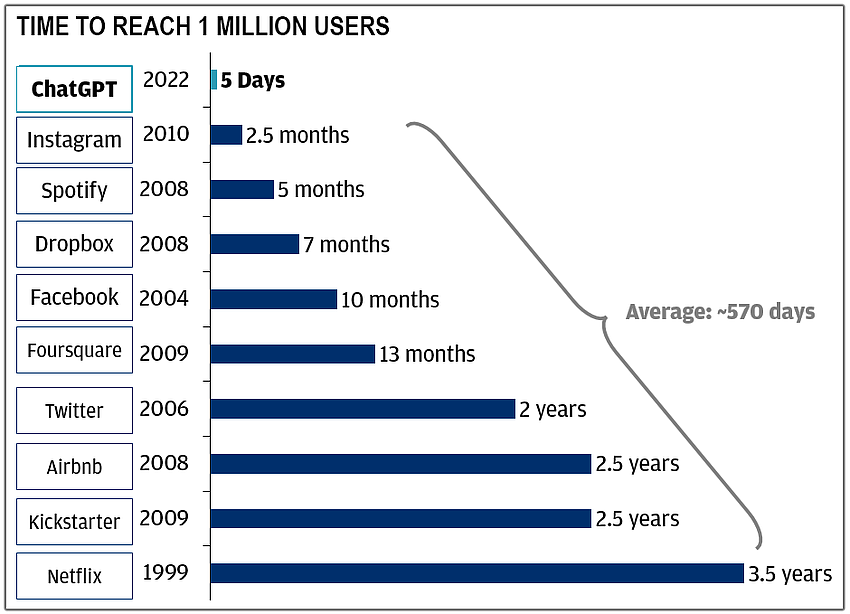

ChatGPT surpassed 1 million users in its first five days and 100 million users by January 2023, according to an April 26 report from J.P. Morgan. (J.P. Morgan Chairman and CEO Jamie Dimon, in the bank giant’s annual shareholder letter, said “AI will be critical to our company’s future success. … The importance of implementing new technologies cannot be overstated.”)

ChatGPT has already passed:

Source: J.P. Morgan

Early on, some worried that AI and ChatGPT’s uber-advanced technology could lead to cheating in high school and college, and raise other ethical concerns. More recently, a wide swath of global technology leaders, including OpenAI CEO Sam Altman, have raised deeper concerns about the uses of the technology. In March 2023, to cite one major example, Elon Musk led a call for a six-month pause on the technology, citing risks to society. (That pause wasn’t taken.)

Then, on May 30, 2023, dozens of tech industry leaders signed a short letter on the risks. “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war,” stated the note, posted on the Center for AI Safety website. Altman signed the letter, in addition to executives from Google’s DeepMind AI unit and Microsoft. Bill Gates signed the note, too.